Inside the mind of an optimizer

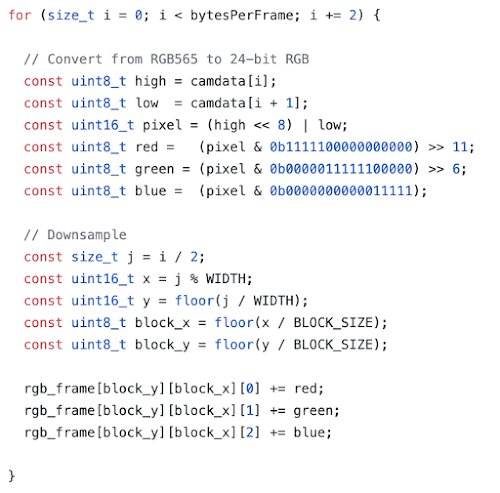

In this post I'm going to walk through my thought process when I examine a piece of code. By explaining each of my changes, hopefully I can reveal some info that will be useful to programmers who don't normally focus on performance. This is the code I'm going to pick apart. It's an image downsample loop from an Arduino sample project that uses machine learning to recognize images from a VGA camera. The requested camera image is QVGA (320x240) resolution and will be downsampled to 10x8 by this loop to make it more manageable for the Arduino Nano 33 BLE to process.

Some background

- bytesPerFrame - holds the value 320x240x2 since each pixel (of type RGB565) occupies 2 bytes.

- camdata[] - the uint8_t buffer holding the image

- rgb_frame[][][] - a 3-dimensional array which will hold the downsampled image

- WIDTH is a constant containing 320

- BLOCK_SIZE is a constant containing 30 (each 30x30 block of pixels from the original image becomes one pixel in rgb_frame[])

What I See

The loop treats the image as a 1-dimensional block of memory and calculates the x, and y values of the original image by dividing the current offset (variable i) by WIDTH. These x and y values are then divided again by BLOCK_SIZE to get the final x and y values used in the rgb_frame[] array.

How I interpret it

For performance considerations, the most efficient code needs to be in the inner most loop since it gets executed the most frequently. In the code above, there is only a single loop and it gets executed for every pixel. I don't need a profiler to find the inefficiency in that code. Here is what stands out to me immediately:

- Division (both integer and floating point) is a slow operation on any CPU. Having 4 divides (modulus uses division to get the remainder) in the inner loop is using a lot of CPU cycles. If the CPU doesn't have a native divide instruction (e.g. AVR), divides can take a heavy toll on performance

- Accessing 16-bit values one byte at a time and combining them is more expensive than reading 16-bits and swapping the byte order (needed in this case because the CPU is little-endian and the camera is writing big-endian data)

- Summing the r/g/b values into a memory array already sends up a big red flag. Read/modify/write operations are always a performance problem on pipelined architectures because the pipeline will stall waiting for the read to complete and then stall again waiting for the results of the addition before finally being written. This issue is less detrimental on embedded systems since the memory speed is usually the same or similar to the CPU execution speed. On a PC or mobile, this can cause significant performance problems

- I actually found a bug in the logic - the calculation of the downsampled x value will overflow since it was designed for a range values of 0 through 9. When working on pixels in the source bitmap at x values between 300 and 319, the x value for the destination array will be 10 (unintentionally overwriting other data)

- Minor nitpick - the comment at the beginning of the loop is misleading since RGB24 is not being generated by the code and in fact, separated RGB555 is what is being extracted from the RGB565 pixels

Tip - What are register variables? What are CPU registers?

Before I get into the specifics of my changes, it's important to have an understanding of some of the things going on under the hood of your CPU. If you're already familiar with CPU registers and how the C compiler manages variables you can skip this, otherwise I suggest you look at a good Wikipedia article which clarifies the subject.

Next Steps

Below is my first pass at optimizing that code. I've reorganized the loops to avoid using division. By looping over the x and y (0..29, 0..29) of each source block as the inner loops, I can keep the r/g/b sums in 3 local (hopefully register) variables. The outer x/y loop is over the downsampled values (0..9, 0..7). I read the pixel data 16-bits at a time and you can see that I'm making use of a gcc intrinsic (__builtin_bswap16). The compiler will replace this intrinsic with a single BSWAP16 instruction. This type of instruction is present on most modern CPUs and if not, gcc will emit the necessary code to implement it. For the target MCU of this project (Cortex-M4F), the instruction is available.

I measured the time to execute this code on the nRF52840 (Arduino Nano 33 BLE) and it runs about 4x faster than the original version. My changes so far haven't really interfered with the readability of the code. I haven't made use of SIMD instructions (there aren't any useful ones for this task on the Cortex-M4) and I haven't resorted to writing assembly language, but there are still more changes that can speed this up.

Uglification

This next set of changes I call the 'uglification' stage. The most effective optimization takes place at the algorithm level followed by the implementation in C. If you've worked on the algorithm and the C code and still need to get more speed, you end up at the uglification stage. At this stage, improvements to the performance usually involve making changes which decrease readability and maintainability. This might mean writing target-specific code with intrinsics or assembly language. It may also mean using lots of #ifdef's for code which does things like accessing hardware directly (e.g. AVR GPIO ports) instead of using an abstraction layer. If you're writing time-critical code where a 1% speedup is considered 'useful', then it would be appropriate to make use of a profiler and tweak the code to get the best output. At this point, it's very helpful to have detailed knowledge of your target CPU architecture, instruction set and memory subsystem.

For my next change, I made use of some knowledge about the CPU (it has 32-bit registers). This is helpful because we're summing 16-bit values (the r/g/b of each pixel). The values of each r/g/b component are reduced to 5-bits each, so we can safely sum up to 11 bits worth (2048) of them before overflowing 16-bits. This is fortuitous because the downsampling block size (30x30) is 900 values to sum. With this knowledge, we can do 2 sums at a time, making use of the upper and lower 16-bits of the 32-bit register as if they're independent. The reason we can do this is because of the way binary addition works. In the example below, 2 32-bit numbers are added together:

0x00250015 +

0x01010006

---------------

0x0126001B

As you can see, as long as we don't overflow 16 bits, the upper and lower halves can operate 'independently' as far as doing addition. Here's what the code looks like:

The source pointer is cast as a uint32_t* and a pair of pixels are read (and byte reversed). The BSWAP32 actually reverses the left and right pixel of the pair too, but since we're summing everything together it doesn't affect the outcome. Next I mask off each pair of color stimuli and shift down into the lower 5 bits. After summing all of the pixels in the block, I need to do a final step to sum the upper and lower halves of my 3 sum variables. This code was measured to run 9x faster than the original. The original code specified the rgb_frame[] as type int16_t, so we're not adding any risk to the code by doing it this way, but if the BLOCK_SIZE got too big, it would overflow in the original code too. Was it worth it? That question can only be answered by the person in charge of the project. In this case, we doubled the speed and made the code a bit less readable.

Wrap Up

I've always been focused on performance. I have a good grasp of computer architecture and how C code gets translated into CPU instructions. With many years of experience, I can easily spot code that's spending too many cycles for the job at hand. My aim for this post was to reveal some of the details that many programmers overlook and to show what a dramatic effect they can have on performance. It's not reasonable to ask every programmer to fill their head with trivia about CPU architectures, instruction sets and the workings of the compiler. Hopefully I've shared enough info to stir the curiosity of budding optimizers to explore this topic further and for everyone else to have a more critical eye when you see code.

To follow my daily project activities, the best place to look is on my Twitter Feed.

Comments

Post a Comment