A 'Big Memory' GIF Decoder

Intro

In a previous article, I wrote about my low memory GIF decoder and how I optimized it to run on embedded/Arduino devices. For this article, we're going in the opposite direction. What if you had all the memory you needed, what could be done to speed up LZW decoding? I asked myself this question many years ago and found some ideas that I refined and then optimized further.

The Traditional Way

The basis of LZW compression is that a dictionary of repeated strings is built dynamically as you encode and decode the code stream. In the traditional implementation, this dictionary is a data structure with a linked-list connecting each color/symbol to the previous in order to represent the different repeating patterns that are compressed in the data stream. When a codeword is encountered that represents a 'string' of colors/symbols, the output is generating by walking down the linked-list. The list is generated in reverse order (the last entry points to the previous), so the pixels must be written to a temporary spot in memory and then the order reversed when written to the output image. When I profiled my decoder and others, this is where most of the time is spent - walking the linked list of strings in the dictionary. The perf killer for "big" CPUs isn't just all of the memory accesses, but the fact the the linked-list indices must be immediately used after being read. Here's a look at the inner loop of the part of the decoder which outputs the pixels for compressed codes:

The 2 perf problems with this technique are that the codes must be written to a temporary place in memory in reverse order (see the --s) and that the next link in the list (code) is read and immediately used in the next iteration; this causes pipeline stalls even if the whole dictionary is in the L0 cache (which should be the case on modern CPUs). So the question becomes, how do we overcome these two problems and speed up decode?

A small improvement

One technique I've seen people use to solve half of this problem is to keep another array with the current lengths of each string in the dictionary. This solves a problem and creates a new one. By knowing the length of the string ahead of time, the output stage can write the pixels directly to the output image (in reverse order) instead of writing them to a temporary spot and copying them back in reverse order. This also creates a new perf problem - the length of each string must be constantly updated after each symbol is added to it in the dictionary; this slightly reduces the benefit of knowing the string lengths.

The New Way

A novel idea I found in my search was to use the output image as the dictionary. This makes sense because the strings of symbols are built up dynamically as the image is decoded - all of the compressed data comes from existing data. This idea couldn't be used in the original giflib because it goes against the "streaming" nature of that code. In the 1980s, many home computers had only a few K bytes of RAM and received their content over slow dial up modems. The GIF libraries at the time needed to work within those constraints and display data as it arrived for fear of delays, corruption or the connection dropping. Modern computers (even embedded MCUs) have a lot more RAM and open up new possibilities for making the decoder more efficient.

In order to use the output image as a more efficient dictionary, it's still necessary to have a similar set of dynamic tables which keep track of new and existing symbols, but instead of holding a linked list of pixels, they can now hold onto a list of offsets to the start of each string along with its length. When a codeword is decoded into pixels, the result can be a simple memcpy() from source offset to current output offset. It's a little more complex than that because of the way LZW works - pixels are emitted for every symbol decoded, but a new pixel will be added to the end of the string when a new code is formed. Here's a snippet of code used in the more efficient decoder:

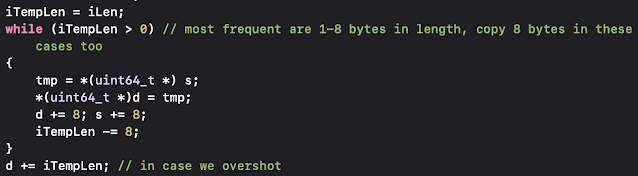

Below this code would normally be a call to memcpy() to copy iLen bytes from *s to *d, but that would not be the fastest way to go. If you are familiar with memcpy(), you know that it is a complex function which is highly optimized for the target CPU because it's used by so many programs in many places. The only downside to calling memcpy() is when the number of bytes to copy is small. There is inherent overhead in the call/return part of using code from the C runtime library, but beyond that, memcpy() tests the byte alignment of the source and destination pointers as well as the requested copy length to see how many bytes (e.g. in SIMD registers) can be moved at a time. I've tested the speed of memcpy() on various machines and I've seen a common cutoff length of about 128 bytes where the benefit of using memcpy() outweighs doing your own copy loop. For GIF decoding, the majority of strings are only a few symbols long, so using memcpy() doesn't make sense. The majority of GIF codes tend to be less than 4 symbols long. There's also the fact that writing a byte at a time is slower than writing the native word size, but that creates potential unaligned write and buffer boundary issues. For x64 and aarch64, memory alignment is not a problem. In my library, I allocate the output buffer a little larger than necessary to solve the potential overwrite problem. Here's my solution for a much faster memcpy() tailored to GIF output:

For short runs, it's much faster than memcpy() and faster than copying one byte at a time. For long runs, it compares well to memcpy(). The method outlined above will outpace the old algorithm even more for longer runs of pixels since it just has to copy them and not walk a linked-list for every pixel. This means that files which are compressed well (have a high compression ratio) will decode much faster than the traditional algorithm.

How does it stack up?

In my 'small memory GIF' blog post, I described the benefits of de-chunking the compressed data from the GIF file, efficiently reading it from the file and holding codes in the largest (register) sized variable possible. My 'small memory' decoder uses the same decode method as traditional LZW decoders and is about twice as fast as giflib. With the improved algorithm described above, images with a low compression ratio (lots of small groupings of unique colors) decode about 5x faster than giflib. For well compressed images, the speed advantage can be greater than 20x faster than giflib. I'm sure that anyone working with GIF images would appreciate that big improvement in speed in exchange for using more memory. I decided that the best way to have a positive impact with this code is to release it as a drop-in replacement for giflib. That way it will be painless for anyone to swap out the slower code for my faster version. I decided to name my project giflib-turbo, similar to libjpeg-turbo. You can find the Github repo here:

https://github.com/bitbank2/giflib-turbo

It will take a while to be adopted by large stake holders, but my goal for this code is to have a positive impact on usability and environmental (energy) impact. giflib is ancient, but it's still being actively used by billions of people daily - nearly every web browser, mobile device and plenty of embedded devices still use it.

As always, please feel free to leave a comment below. I'd like to hear about any projects which make use of this code.

Comments

Post a Comment